The MIRI Framework

Process Overview

The process begins with a noisy initialization. Each loop minimizes the Mutual Information between the data and the missingness mask.

To achieve this, we train a Rectified Flow defined by an ODE that transports current estimates toward a distribution where missing patterns are unpredictable.

This cycle continues until the imputed values are statistically indistinguishable from the observed data.

Observed Data & Mask

Random Noise Fill

Minimize Mutual Information

Rectified Flow Transport

Update Imputation

Final Imputed Data

Experimental Results

Competitive performance on Tabular and Image Benchmarks

Quantitative results on CIFAR-10

Methods are evaluated at three levels of missingness (20%, 40%, 60%) using FID, PSNR, and SSIM. The best results are highlighted in bold.

| Method | 20% Missingness | 40% Missingness | 60% Missingness | ||||||

|---|---|---|---|---|---|---|---|---|---|

| FID ↓ | PSNR ↑ | SSIM ↑ | FID ↓ | PSNR ↑ | SSIM ↑ | FID ↓ | PSNR ↑ | SSIM ↑ | |

| GAIN | 164.11 | 21.21 | 0.7803 | 281.62 | 16.20 | 0.5576 | 285.53 | 11.99 | 0.2933 |

| KnewImp | 153.09 | 18.84 | 0.6463 | 193.68 | 15.81 | 0.4740 | 264.40 | 14.04 | 0.3317 |

| MissDiff | 90.51 | 22.29 | 0.7702 | 129.84 | 19.65 | 0.6648 | 197.91 | 16.78 | 0.4989 |

| HyperImpute | 8.92 | 34.09 | 0.9750 | 65.01 | 23.22 | 0.7931 | 130.36 | 20.17 | 0.6533 |

| MIRI (Ours*) | 6.01 | 32.29 | 0.9736 | 27.53 | 27.14 | 0.9126 | 68.58 | 23.22 | 0.8063 |

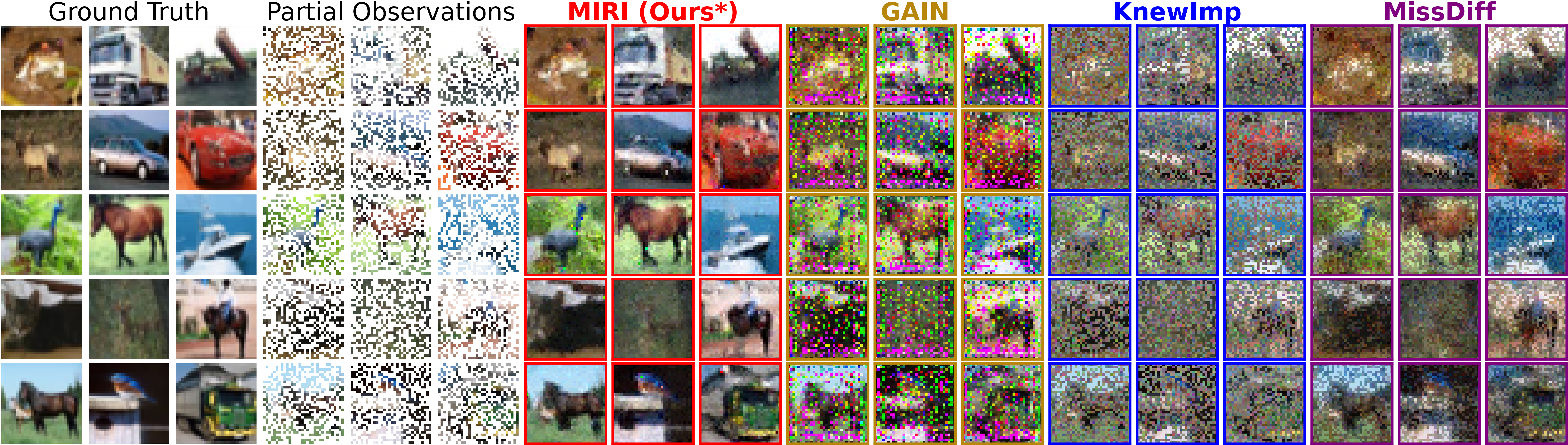

CIFAR-10 Image Imputation (60% Missingness)

15 uncurated 32×32 CIFAR-10 images and their imputations. Pixels are removed from all RGB channels.

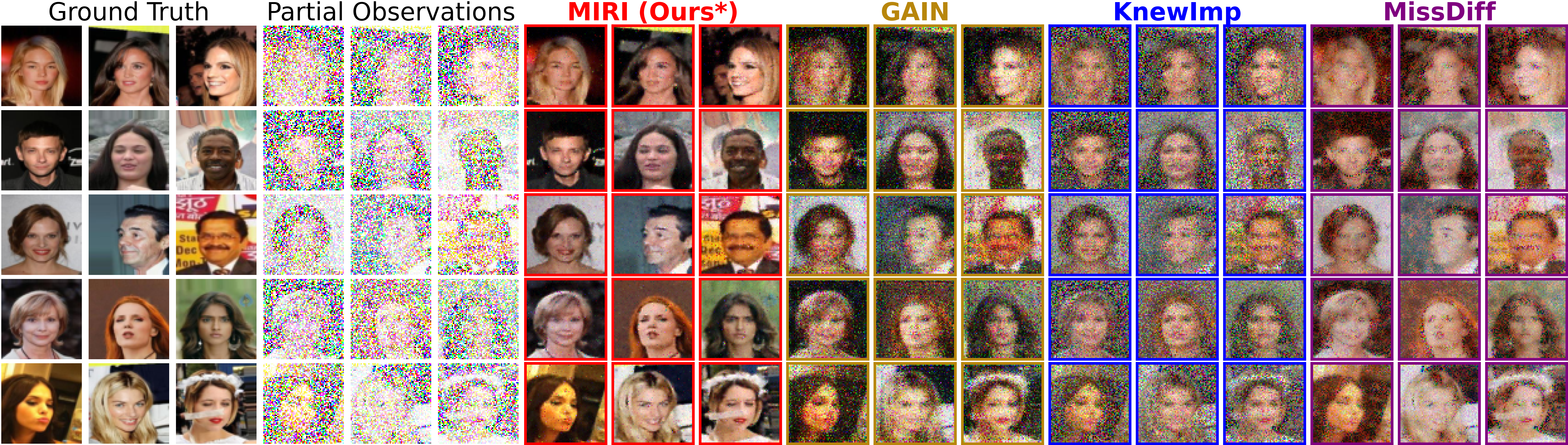

CelebA Image Imputation (60% Missingness)

15 uncurated 64×64 CelebA images and their imputations. Pixels are removed from each RGB channel independently.

Key Observations

- Superior reconstruction quality: MIRI consistently produces sharper images with better preservation of high-frequency details (e.g., hair textures, facial features) compared to baseline methods.

- Reduced artifacts: Unlike GAIN and other GAN-based methods that often produce blurry or hallucinated features, MIRI maintains realistic textures while avoiding over-smoothing.

- Robust across missingness levels: MIRI demonstrates strong performance across all three missingness rates (20%, 40%, 60%), with particularly notable improvements at higher missingness levels.

Citation

@inproceedings{yu2025missing,

title={Missing Data Imputation by Reducing Mutual Information with Rectified Flows},

author={Yu, Jiahao and Ying, Qizhen and Wang, Leyang and Jiang, Ziyue and Liu, Song},

booktitle={Proceedings of the 39th Conference on Neural Information Processing Systems (NeurIPS)},

year={2025}

}